Imagine a Grand Hotel Lobby: The Art of Seamlessly Managing Crowds

The grand hotel lobby is buzzing with activity. Guests are arriving for check-in, others are ordering room service, some are asking for directions to local attractions, while a few are trying to reserve tables at the in-house restaurant. Despite the flurry of activity, the hotel staff manages everything seamlessly. There’s no chaos, just an elegant dance of tasks being assigned and completed in harmony. How does this happen?

The secret lies in a well-orchestrated system, where every staff member knows their role. There’s a front desk that delegates tasks, concierges who handle requests, and bellhops ready to carry luggage — each working efficiently to serve multiple guests at once without overwhelming anyone.

This is precisely how Nginx handles thousands — or even millions — of requests concurrently. Much like the hotel’s staff, Nginx is a highly efficient traffic manager, designed to ensure no request waits in line for long. It assigns tasks to the right components, handles multiple visitors simultaneously, and ensures that your web experience is smooth and responsive.

In this blog, we’ll dive deeper into the architecture of Nginx — the unsung hero behind the seamless delivery of web pages. From its use of asynchronous, non-blocking event-driven processing to the way it optimizes system resources, we’ll uncover the genius behind how Nginx serves so many “guests” with such grace.

Why is high concurrency important?

One of the biggest challenges for a website architect has always been concurrency. Since the beginning of web services, the level of concurrency has been continuously growing. It’s not uncommon for a popular website to serve hundreds of thousands and even millions of simultaneous users. A decade ago, the major cause of concurrency was slow clients — users with ADSL or dial-up connections. Nowadays, concurrency is caused by a combination of mobile clients and newer application architectures, which are typically based on maintaining a persistent connection that allows the client to be updated with news, tweets, friend feeds, and so on. Another important factor contributing to increased concurrency is the changed behavior of modern browsers, which open four to six simultaneous connections to a website to improve page load speed.

To illustrate the problem with slow clients, imagine a simple Apache-based web server that produces a relatively short 100 KB response, such as a web page with text or an image. It may take merely a fraction of a second to generate or retrieve this page, but it takes 10 seconds to transmit it to a client with a bandwidth of 80 kbps (10 KB/s). Essentially, the web server pulls the 100 KB of content relatively quickly and is then busy for 10 seconds, slowly sending this content to the client before freeing its connection. Now imagine 1,000 simultaneously connected clients requesting similar content. If only 1 MB of additional memory is allocated per client, that results in 1,000 MB (about 1 GB) of extra memory devoted to serving just 1,000 clients 100 KB of content each. In reality, a typical web server based on Apache commonly allocates more than 1 MB of additional memory per connection, and regrettably, tens of kbps is still often the effective speed of mobile communications. Although sending content to a slow client can be improved to some extent by increasing the size of the operating system kernel socket buffers, this is not a general solution to the problem and can have undesirable side effects.
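
To make the numbers concrete, here is the same back-of-the-envelope calculation as a few lines of Python. The figures come straight from the paragraph above; the variable names are just for illustration, and the 1 MB per connection is the optimistic assumption stated in the text, not a measured value.

```python
# Figures taken from the slow-client example above.
response_kb = 100          # size of one response, in KB
bandwidth_kb_per_s = 10    # 80 kbps is roughly 10 KB/s
clients = 1000             # simultaneously connected clients
mem_per_client_mb = 1      # optimistic per-connection overhead (assumption from the text)

# How long one connection stays occupied just pushing bytes to a slow client.
transmit_seconds = response_kb / bandwidth_kb_per_s

# Memory tied up by connections versus the content actually being delivered.
extra_memory_mb = clients * mem_per_client_mb
payload_mb = clients * response_kb / 1000   # using 1 MB = 1000 KB for simplicity

print(f"Each connection stays busy for about {transmit_seconds:.0f} s")
print(f"Extra memory held by connections: {extra_memory_mb} MB")
print(f"Content actually being delivered: {payload_mb:.0f} MB")
```

In other words, under these assumptions the server holds roughly ten times more memory for bookkeeping than the content it is sending.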

With persistent connections, the problem of handling concurrency is even more pronounced because, to avoid the latency associated with establishing new HTTP connections, clients would stay connected, and for each connected client, there’s a certain amount of memory allocated by the web server.

What fundamental design principles differentiate nginx from older web servers like Apache?

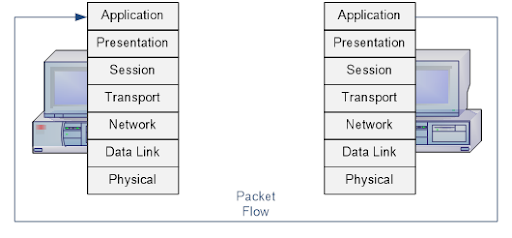

Connection Handling Model

Apache (Traditional Model): Older web server architectures like Apache generally employ a process- or thread-based model, where a separate process or thread is spawned for each new connection. This approach was suitable for the Internet’s state in the early 1990s, when a website typically ran on a standalone physical server.

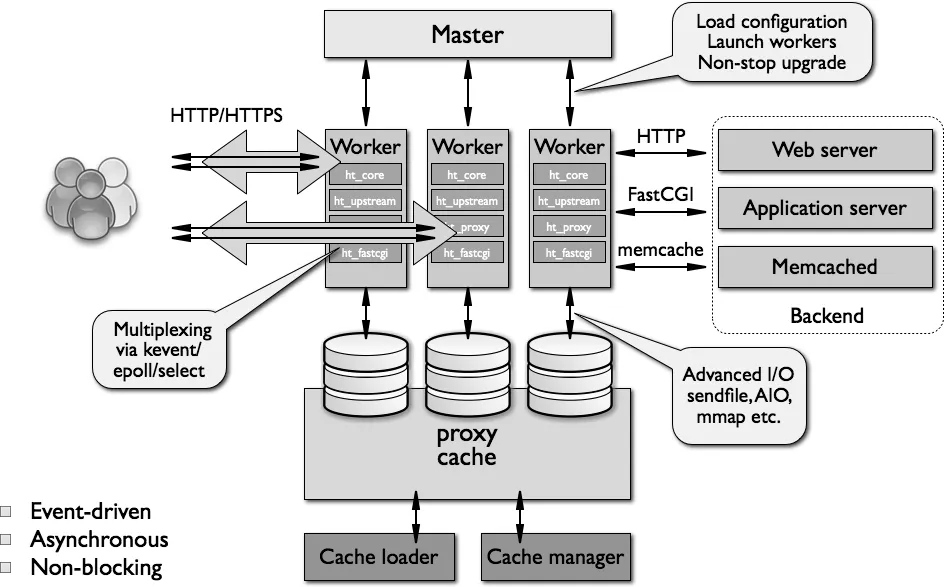

Nginx (Event-Driven Model): In contrast, Nginx was built with an event-based, asynchronous, single-threaded, non-blocking architecture. It does not spawn a new process or thread for every connection or web page request. Instead, it uses multiplexing and event notifications heavily. Connections are processed in a highly efficient run-loop within a limited number of single-threaded processes called workers.
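
To get a feel for the event-driven idea, here is a minimal sketch in Python rather than nginx's C. It uses the standard `selectors` module (which picks epoll, kqueue, or a similar mechanism depending on the OS) to serve several connections from one thread. The function names `on_readable`, `run_once`, and `demo` are made up for this post, and `socket.socketpair()` stands in for real network clients so the example is self-contained.

```python
import selectors
import socket


def on_readable(sel, conn):
    """Callback run when `conn` has data: echo it back upper-cased."""
    data = conn.recv(4096)
    if data:
        # Tiny payloads only; a real server would also handle partial writes.
        conn.send(data.upper())
    else:
        # An empty read means the peer closed the connection.
        sel.unregister(conn)
        conn.close()


def run_once(sel, timeout=0.1):
    """One pass of the loop: wait for ready sockets, then run their callbacks."""
    events = sel.select(timeout)
    for key, _mask in events:
        key.data(sel, key.fileobj)  # key.data holds the registered callback
    return len(events)


def demo(num_clients=3):
    sel = selectors.DefaultSelector()
    clients = []
    for _ in range(num_clients):
        client, server_side = socket.socketpair()
        server_side.setblocking(False)
        sel.register(server_side, selectors.EVENT_READ, on_readable)
        client.settimeout(1.0)  # keeps the demo from hanging if something goes wrong
        clients.append(client)

    for i, client in enumerate(clients):
        client.sendall(f"guest {i}".encode())

    handled = 0
    while handled < num_clients:   # a single thread serves every connection
        handled += run_once(sel)
    replies = [client.recv(4096) for client in clients]

    for client in clients:
        client.close()
    run_once(sel)                  # lets the callbacks see EOF and clean up
    sel.close()
    return replies


if __name__ == "__main__":
    print(demo())
```

No thread or process is created per connection here: the loop simply asks the OS which sockets are ready and runs the matching callback, which is the same general shape as the worker run-loop described above.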

Resource Utilization (Memory and CPU)

Apache: The traditional model of spawning a process or thread per connection is very inefficient in terms of memory and CPU consumption. Allocating a new runtime environment (heap, stack memory, execution context) and the CPU time spent creating these items can lead to poor performance due to thread thrashing on excessive context switching. For example, serving 1,000 slow clients requesting 100 KB of content could result in 1 GB of extra memory devoted to connections, as typical Apache-based servers often allocate more than 1 MB per connection.

nginx: Its event-driven architecture means that memory usage is very conservative and extremely efficient, and it conserves CPU cycles because there is no continuous create-destroy pattern for processes or threads. Even as the load increases, memory and CPU usage remain manageable. nginx can deliver tens of thousands of concurrent connections on typical hardware.

Scalability

Apache: Apache’s architecture was not suitable for nonlinear scalability with a growing number of web services and users. While it provided a solid foundation and became a general-purpose web server with many features, this universality came at the cost of less scalability due to increased CPU and memory usage per connection.

nginx: It was designed specifically for nonlinear scalability in both the number of simultaneous connections and requests per second. nginx addresses the “C10K problem” (handling 10,000 simultaneous clients) by being able to scale well across multiple CPU cores, preventing thread thrashing and lock-ups. Workload is shared across several workers, leading to more efficient server resource utilization.

Overview of nginx Architecture

Code Structure

The nginx worker code includes the core and the functional modules. The core of nginx is responsible for maintaining a tight run-loop and executing appropriate sections of modules’ code on each stage of request processing. Modules constitute most of the presentation and application layer functionality. Modules read from and write to the network and storage, transform content, do outbound filtering, apply server-side include actions, and pass the requests to the upstream servers when proxying is activated.

While handling a variety of actions associated with accepting, processing, and managing network connections and content retrieval, nginx uses event notification mechanisms and several disk I/O performance enhancements in Linux, Solaris, and BSD-based operating systems, like kqueue, epoll, and event ports. The goal is to provide as many hints to the operating system as possible, in regards to obtaining timely asynchronous feedback for inbound and outbound traffic, disk operations, reading from or writing to sockets, timeouts, and so on. The usage of different methods for multiplexing and advanced I/O operations is heavily optimized for every Unix-based operating system nginx runs.

Workers Model

As previously mentioned, nginx doesn’t spawn a process or thread for every connection. Instead, worker processes accept new requests from a shared “listen” socket and execute a highly efficient run-loop inside each worker to process thousands of connections per worker. There’s no specialized arbitration or distribution of connections to the workers in nginx; this work is done by the OS kernel mechanisms. Upon startup, an initial set of listening sockets is created. Workers then continuously accept, read from, and write to the sockets while processing HTTP requests and responses.

The run-loop is the most complicated part of the nginx worker code. It includes comprehensive inner calls and relies heavily on the idea of asynchronous task handling. Asynchronous operations are implemented through modularity, event notifications, extensive use of callback functions, and fine-tuned timers. Overall, the key principle is to be as non-blocking as possible. The only situation where nginx can still block is when there’s not enough disk storage performance for a worker process.
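
The role of timers in such a loop can be sketched as follows. This is only an illustration of the idea, not nginx's code: `TimerQueue` is a hypothetical class, a binary heap stands in for whatever structure nginx uses internally, and a simulated clock replaces the real wait inside epoll or kqueue so the example runs instantly.

```python
import heapq
import itertools


class TimerQueue:
    """Keeps callbacks ordered by deadline so the loop knows how long it may sleep."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker so callbacks are never compared

    def add(self, deadline, callback):
        heapq.heappush(self._heap, (deadline, next(self._seq), callback))

    def next_timeout(self, now):
        """Time until the nearest deadline; None means 'wait for I/O only'."""
        if not self._heap:
            return None
        return max(0.0, self._heap[0][0] - now)

    def expire(self, now):
        """Run every callback whose deadline has passed; return how many fired."""
        fired = 0
        while self._heap and self._heap[0][0] <= now:
            _deadline, _seq, callback = heapq.heappop(self._heap)
            callback()
            fired += 1
        return fired


log = []
timers = TimerQueue()
timers.add(5.0, lambda: log.append("keepalive timeout"))
timers.add(2.0, lambda: log.append("client header timeout"))

now = 0.0
while (timeout := timers.next_timeout(now)) is not None:
    now += timeout       # stand-in for blocking in the poll call for `timeout` seconds
    timers.expire(now)   # then dispatch whatever became due

print(log)  # ['client header timeout', 'keepalive timeout']
```

The point is that the loop never sleeps longer than the nearest deadline, so timeouts are handled by the same single thread that handles I/O events.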

Nginx Process Roles

Nginx runs several processes in memory, each with specific roles, which contributes to its efficient, high-performance architecture.

The processes and their roles are as follows:

Single Master Process:

- There is a single master process in nginx.

- This master process runs as the root user.

- Its responsibilities include:

- Reading and validating the configuration.

- Creating, binding, and closing sockets.

- Starting, terminating, and maintaining the configured number of worker processes.

- Reconfiguring without service interruption.

- Controlling non-stop binary upgrades (starting the new binary and rolling back if necessary).

- Re-opening log files.

- Compiling embedded Perl scripts.

Worker Processes:

- Nginx uses several worker processes.

- These worker processes run as an unprivileged user.

- Each worker process runs its event loop in a single thread; newer releases (1.7.11 and later) can optionally offload blocking file operations to thread pools.

- They accept, handle, and process connections from clients.

- Worker processes provide reverse proxying and filtering functionality.

- They perform almost everything else that nginx is capable of.

- System administrators should monitor worker processes as they reflect the day-to-day operations of the web server.

- Worker processes accept new requests from a shared “listen” socket.

- They execute a highly efficient run-loop inside each worker to process thousands of connections per worker.

- Nginx scales well across multiple cores by spawning several workers, typically one separate worker per core, allowing full utilization of multicore architectures and preventing thread thrashing and lock-ups.
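
The master/worker split and the shared “listen” socket can be sketched in a few lines of Python. This is a simplified illustration under several assumptions: it relies on `os.fork`, so it only runs on Unix-like systems; the helper names `worker_loop`, `start_workers`, and `demo` are invented for this post; and each worker uses a plain blocking `accept` where a real nginx worker would run its non-blocking event loop.

```python
import os
import signal
import socket


def worker_loop(listen_sock):
    """Worker: accept on the inherited socket and answer each client."""
    while True:
        conn, _addr = listen_sock.accept()  # the kernel decides which worker wakes up
        with conn:
            if conn.recv(1024):
                conn.sendall(f"served by worker {os.getpid()}".encode())


def start_workers(listen_sock, count):
    """Master: fork `count` workers that all share the same listening socket."""
    pids = []
    for _ in range(count):
        pid = os.fork()
        if pid == 0:  # child process becomes a worker
            try:
                worker_loop(listen_sock)
            finally:
                os._exit(0)
        pids.append(pid)
    return pids


def demo(worker_count=2, requests=4):
    # The master creates and binds the socket once, before any worker exists.
    listen_sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listen_sock.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listen_sock.listen(16)
    port = listen_sock.getsockname()[1]

    pids = start_workers(listen_sock, worker_count)
    replies = []
    try:
        for _ in range(requests):
            with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
                client.sendall(b"hello")
                # Read until the worker closes the connection.
                reply = b"".join(iter(lambda: client.recv(1024), b""))
                replies.append(reply.decode())
    finally:
        for pid in pids:  # the master shuts its workers down with a signal
            os.kill(pid, signal.SIGTERM)
            os.waitpid(pid, 0)
        listen_sock.close()
    return pids, replies


if __name__ == "__main__":
    worker_pids, answers = demo()
    print(worker_pids)
    print(answers)
```

Notice that the master never touches client traffic: it only creates the socket, starts the workers, and stops them again. Which worker answers a given request is left entirely to the kernel.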

Special Purpose Processes:

In addition to the master and worker processes, nginx includes a couple of special-purpose processes: a cache loader and a cache manager.

- These processes also run as an unprivileged user.

Cache Loader Process:

- Checks on-disk cache items.

- Populates nginx’s in-memory database with cache metadata.

- It traverses directories, checks cache content metadata, updates shared memory entries, and then exits once everything is ready.

Cache Manager Process:

- Primarily responsible for cache expiration and invalidation.

- It remains in memory during normal nginx operation and is restarted by the master process if it fails.

All nginx processes primarily use shared-memory mechanisms for inter-process communication. This design, based on an event-driven, asynchronous, single-threaded, non-blocking architecture, allows nginx to handle many thousands of concurrent connections and requests per second while maintaining manageable memory and CPU usage, even under increasing load.

How nginx modules are invoked:

Nginx modules are invoked through a highly customizable system primarily based on callbacks using pointers to executable functions. This mechanism is integral to nginx’s event-driven, asynchronous, and non-blocking architecture.

Here’s a breakdown of how nginx modules are invoked:

- Callback Mechanism: The fundamental way modules are invoked is through a series of callbacks using pointers to the executable functions. This design allows for extreme customization of module invocation.

- Integration with the Run-Loop: The core of nginx is responsible for maintaining a tight run-loop within its worker processes. Within this run-loop, nginx executes appropriate sections of modules’ code at each stage of request processing. This run-loop involves processing events with OS-specific mechanisms (like epoll or kqueue), accepting events, dispatching relevant actions, processing request headers and bodies, generating response content, finalizing requests, and re-initializing timers and events.

- Pipeline/Chain of Modules: Internally, nginx processes connections through a pipeline, or chain, of modules. This means that for nearly every operation, there is a specific module performing the relevant work. Modules such as `http` and `mail` provide an additional layer of abstraction and are responsible for maintaining the correct order of calls to the respective functional modules.

- Phases and Handlers: When nginx handles an HTTP request, it passes it through a number of processing phases. At each phase, there are handlers to call. The nginx core chooses the appropriate phase handler based on the configured location matching the request. Phase handlers process a request, generate an appropriate response, and send the header and body. They are attached to locations defined in the configuration file.

Examples of phases include server rewrite, location, access control, try_files, and log phases.

- Filters: After a phase handler completes its task, it passes its output buffer to the first filter in a chain. Filters then manipulate the output produced by a handler. A filter gets called, starts working, and then calls the next filter in the chain. Filters do not have to wait for the previous filter to finish; they can begin their work as soon as input is available, much like a Unix pipeline. There are distinct header filters and body filters, with nginx feeding the header and body separately.

- Upstream and Load Balancer Modules: Upstream modules, which act as reverse proxies, invoke callbacks when the upstream server is ready to be written to or read from. These callbacks handle tasks like crafting request buffers, processing responses, and aborting requests. Load balancer modules attach to the proxy_pass handler (which is an upstream-related content handler) to choose an eligible upstream server when multiple are configured.

- Module Attachment Points: Modules can attach at numerous specific points throughout the nginx lifecycle and request processing, including:

- Before the configuration file is read.

- For each configuration directive for a location or server.

- When the main, server, or location configurations are initialized or merged.

- When the master or worker processes start or exit.

- When handling a client request.

- When filtering the response header and body.

- When picking, initiating, or re-initiating a request to an upstream server.

- When processing the response from an upstream server.

- When finishing an interaction with an upstream server.
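
The phase handlers and filter chain described above can be pictured with a small Python analogy. To be clear, nginx does this in C with pointers to functions; the sketch below only mirrors the flow. All names (`find_location`, `check_access`, `content_handler`, `upper_filter`, `footer_filter`, `handle`) are hypothetical, and generators play the role of body filters that start working as soon as input is available.

```python
def find_location(request):
    """Phase callback: record which configured location matches the URI."""
    request["location"] = "/static/" if request["uri"].startswith("/static/") else "/"


def check_access(request):
    """Phase callback: a toy access-control decision."""
    request["allowed"] = request.get("client") != "blocked"


# Callbacks are registered per phase and invoked in phase order.
PHASE_HANDLERS = [("location", find_location), ("access control", check_access)]


def content_handler(request):
    """Produces the response body chunk by chunk."""
    yield b"hello from "
    yield request["location"].encode()


def upper_filter(chunks):
    """Body filter: transforms each chunk as soon as it arrives."""
    for chunk in chunks:
        yield chunk.upper()


def footer_filter(chunks):
    """Body filter: passes chunks through, then appends a trailer."""
    yield from chunks
    yield b" [end]"


def handle(request, body_filters):
    trace = []
    for phase, callback in PHASE_HANDLERS:
        callback(request)
        trace.append(phase)
    chunks = content_handler(request)
    for body_filter in body_filters:  # each filter lazily wraps the previous one
        chunks = body_filter(chunks)
    return trace, b"".join(chunks)


trace, body = handle({"uri": "/static/logo.png"}, [upper_filter, footer_filter])
print(trace)  # ['location', 'access control']
print(body)   # b'HELLO FROM /STATIC/ [end]'
```

Because each filter pulls chunks from the one before it, no filter waits for the whole body to be produced, which is the Unix-pipeline behavior mentioned in the Filters bullet.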

Historically, nginx modules could not be loaded dynamically: they had to be compiled along with the core at the build stage. Since version 1.9.11, nginx also supports dynamically loadable modules, which are built as separate shared objects and enabled with the `load_module` directive.

Conclusion

No more for today. Let your brain have a coffee and savor the silence — it’s well deserved. ☕